Preprocessing Vibration Data for Machine Learning

Machine learning is a branch of artificial intelligence that enables computers to learn from data and make decisions without explicit programming. By applying machine learning models to large datasets, we can predict outcomes, identify trends, and uncover hidden insights.

The high-frequency nature of vibration data can offer unique insights to inform machine learning systems. This is especially true when advanced analytics (FFTs, PSDs, Pseudo Velocity, etc.) are used to extract key features from vibration data.

These insights can range from system performance parameters to predictive maintenance capability. Another benefit of vibration data is that it is relatively inexpensive to acquire compared with ultrasonics, oil analysis, and other sensing solutions that can offer predictive capability.

By using vibration data in conjunction with machine learning models, companies can predict failures before they happen, optimize manufacturing processes, and enhance product quality with a level of precision that wasn't possible before.

Fig. 1. Repair cost over time.

By the end of this blog, you will have a clear understanding of the necessary steps to prepare vibration sensor data for use in machine learning and be equipped with practical techniques to improve your model’s performance.

We will explore the key steps involved in preparing raw data acquired from vibration sensors for machine learning applications.

For the examples in this blog, we used an enDAQ data logger to capture the vibration data; however, any high-performance vibration data logger will suffice. Before this raw data can be used to train machine learning models, it needs to undergo several preprocessing stages.

Proper data preparation ensures that the data is clean, accurate, and formatted in a way that maximizes the effectiveness of ML algorithms.

We will cover the following essential topics in this guide:

Tools, environment and libraries

We used Python 3 and the Jupyter Notebook environment (installed through the Anaconda distribution) for this work. The following libraries were imported for various tasks:

- idelib and endaq – These libraries are used for reading and processing EnDAQ sensor data. (decimal, Python's standard-library module for decimal arithmetic, is also imported.)

- numpy – A fundamental library for numerical operations, used for handling arrays and mathematical computations.

- pandas – A powerful library for data manipulation and analysis, used for working with data structures like DataFrames.

- seaborn – A data visualization library based on Matplotlib, used for creating informative and attractive statistical graphics.

- matplotlib – A popular plotting library used to create static, animated, and interactive visualizations.

- plotly – An interactive plotting library that allows you to create dynamic and responsive charts.

- fft – A module in SciPy that provides efficient functions for computing the Fast Fourier Transform (FFT) and its inverse, enabling the analysis of signals in the frequency domain.

- signal – A module in SciPy that provides functions for signal processing, including filtering, spectral analysis, and operations on signals such as convolution, windowing, and designing filters.

# Import libraries
import decimal
import idelib
import endaq
import numpy as np
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt
import plotly.graph_objects as go
import scipy.signal as signal
from plotly.subplots import make_subplots
from scipy.fft import fft
from scipy.signal import find_peaks

Testing machine and sensor type

EnDAQ sensors, available in the S and W series, can collect a variety of data types. For the analysis in this blog, an S series EnDAQ was mounted on a motor using double-sided tape, as shown in the figure below. Data was collected while the system ran for a period of time, and the results were saved on a local PC. Vibration along the X, Y, and Z axes was measured at a sample rate of 4052.60 Hz and analyzed. Temperature data, sampled at 1.01 Hz, was also included solely to demonstrate how resampling works.

Fig. 2. EnDAQ sensor mounted on a rotating system.

# Read data
doc = endaq.ide.get_doc(r'C:\HAMED\3.SmartBelt\FFT\R704_high speed_driven_SSS10071_056.ide')

endaq.ide.get_channel_table(doc)

This returns the table below:

Note that vibration along the X, Y, and Z axes is collected by two accelerometers: a 40g accelerometer at roughly 4000 Hz (4052.60 Hz in this recording) and a 500g accelerometer at 20,000 Hz. Since we are looking at low-g, low-frequency vibrations, the 40g accelerometer is sufficient, and its smaller data size makes these demo scripts execute more quickly.

The data is extracted and converted into a Pandas DataFrame for significantly easier handling. First, the acceleration channel is selected, and a Pandas DataFrame is created containing the acceleration values for all three axes:

## Acceleration data
acc_df = endaq.ide.get_primary_sensor_data(doc=doc.channels[80], measurement_type="acceleration", time_mode="datetime")
# Display the final DataFrame
display(acc_df)

The output will be the table below:

This process can be applied to convert each (or multiple) channel(s) into a Pandas DataFrame. Let's also apply it to the Temperature channel:

## Temperature data
temp_df = endaq.ide.get_primary_sensor_data(doc=doc, measurement_type="temperature", time_mode="datetime")
temp_df

The output is:

Resampling

As you may have noticed, the number of acceleration data points (sample rate: ~4000 Hz, 1,362,231 data points) does not match the number of temperature data points (sample rate: ~1 Hz, 338 data points). This discrepancy is due to the different sample rates of the two sensors: over the same recording period, a channel sampled at a lower rate records fewer data points. Such mismatches are common in sensor data, and resampling techniques are used to align the channels. Increasing the sample rate is called upsampling; decreasing it is called downsampling.
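A quick arithmetic check makes this concrete. Dividing each sample count quoted above by its sample rate shows that both channels span roughly the same recording (about 5.6 minutes), so the temperature channel simply has far fewer points. This is only a sanity check on the numbers in the text, not part of the processing pipeline.

```python
# Sample counts and sample rates quoted in the text above
n_acc, fs_acc = 1_362_231, 4052.60   # 40g accelerometer channel
n_temp, fs_temp = 338, 1.01          # temperature channel

# Recording duration = number of samples / sample rate
duration_acc = n_acc / fs_acc
duration_temp = n_temp / fs_temp

print(f"Acceleration: {duration_acc:.1f} s, temperature: {duration_temp:.1f} s")
```

Both durations come out at roughly 335 to 336 seconds; the small difference reflects where each channel's first and last samples fall.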

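Upsampling is often preferred over downsampling because downsampling can discard valuable data. Here, downsampling the acceleration to the ~1 Hz temperature rate would throw away nearly all of the vibration content, so we upsample the temperature data to the acceleration timestamps instead. This aligns both datasets without discarding any acceleration samples. Note, however, that upsampling adds no new information: with nearest-neighbor alignment, each temperature reading is simply repeated across the acceleration timestamps around it.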

# Resample temperature data to match the length of the acceleration data
# Assuming we're resampling the temperature data to match the acceleration timestamp index
temp_resampled_df = temp_df.reindex(acc_df.index, method='nearest')  # Use nearest to align timestamps

Finally, the resampled temperature data is added as a new column next to the acceleration data, creating a unified dataset in which acceleration and temperature share the same timestamps.

# Add the resampled temperature values to the very right column of acc_df
# Assuming temp_resampled_df is aligned with acc_df (same index)
endaq_df = acc_df.copy()  # Create a copy of acc_df
endaq_df['Temperature'] = temp_resampled_df.iloc[:, 0]  # Add the first column of resampled temperature data

# Display the new DataFrame
display(endaq_df)

# Optionally, save the DataFrame to a CSV or other file format if needed
# endaq_df.to_csv('endaq_data.csv', index=True)

Note that EnDAQ provides its own resampling function, endaq.calc.utils.resample(df, sample_rate=None). For more details, please refer to the EnDAQ documentation.
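Nearest-neighbor reindexing produces a staircase, because each temperature value is held until the next reading. If a smoother temperature curve is preferable, linear interpolation in time is a common alternative. The sketch below compares the two on small hypothetical DataFrames (a 100 Hz "acceleration" index and 1 Hz temperature readings, both invented for illustration); it uses only standard pandas calls and is not the method used for the figures in this post.

```python
import numpy as np
import pandas as pd

# Hypothetical data: 5 s of acceleration at 100 Hz and temperature at 1 Hz
start = pd.Timestamp("2024-01-01 00:00:00")
acc = pd.DataFrame(
    {"X": np.random.default_rng(0).normal(size=500)},
    index=pd.date_range(start, periods=500, freq="10ms"),
)
temp = pd.DataFrame(
    {"Temperature": [28.5, 28.6, 28.8, 29.1, 29.3]},
    index=pd.date_range(start, periods=5, freq="1s"),
)

# Nearest-neighbor upsampling (the approach used above): each acceleration
# timestamp takes the closest temperature reading
temp_nearest = temp.reindex(acc.index, method="nearest")

# Alternative: linear interpolation in time between temperature readings;
# timestamps after the last reading are held at that reading's value
union_index = temp.index.union(acc.index)
temp_linear = temp.reindex(union_index).interpolate(method="time").reindex(acc.index)

# Attach the interpolated temperature as a new column
combined = acc.assign(Temperature=temp_linear["Temperature"])
```

Either way, the result has one temperature value per acceleration timestamp; the choice mainly affects how abrupt the temperature steps look between readings.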

Data Visualization

Visualizing data is crucial for gaining preliminary insights. Various types of plots can be used to explore the data and identify patterns. For example, a time series plot can help visualize any increase or decrease in a variable over time. Both Matplotlib and Plotly can be used to plot variables over time. The key difference between the two is that Plotly offers interactive features, allowing users to zoom in, zoom out, and interact with the data more dynamically, while Matplotlib is more static.

In the subplot shown below, the first plot displays the changes in acceleration across the X, Y, and Z axes, while the second plot compares the resampled temperature data with the original temperature data.

# Plot the original and resampled temperature data alongside the acceleration data
plt.figure(figsize=(12, 6))

# Plot acceleration data (for X (40g), Y (40g), Z (40g))
plt.subplot(2, 1, 1)
plt.plot(acc_df.index, acc_df["X (40g)"], label="X (40g)", alpha=0.7)
plt.plot(acc_df.index, acc_df["Y (40g)"], label="Y (40g)", alpha=0.7)
plt.plot(acc_df.index, acc_df["Z (40g)"], label="Z (40g)", alpha=0.7)
plt.xlabel("Timestamp")
plt.ylabel("Acceleration (g)")
plt.legend(loc="upper left")
plt.title("Acceleration Data (X, Y, Z)")

# Plot temperature data
plt.subplot(2, 1, 2)
plt.plot(temp_df.index, temp_df.iloc[:, 0], label="Original Temperature", alpha=0.7, color='orange', marker='o')  # Original
plt.plot(temp_resampled_df.index, temp_resampled_df.iloc[:, 0], label="Resampled Temperature", alpha=0.7, color='green')  # Resampled
plt.xlabel("Timestamp")
plt.ylabel("Temperature (°C or other units)")
plt.legend(loc="upper left")
plt.title("Temperature Data (Original vs Resampled)")

plt.tight_layout()
plt.show()

Fig. 3. Visualizing changes in X, Y, and Z vibration (top) and temperature (bottom) plot.

For the sake of simplicity, only a small portion of the data is considered from now on: rows 500,000 to 600,000 (100,000 samples, or roughly 25 seconds at 4052.60 Hz).

# Select rows from index positions 500,000 to 600,000 (inclusive of 500,000 and exclusive of 600,000)
selected_endaq_df = endaq_df.iloc[500000:600000]

# Display the selected rows
display(selected_endaq_df)

Data Analysis

Data analysis involves examining data to extract meaningful insights. This process can be performed using various techniques, such as plots, tables, and statistical parameters.

The temperature time series plot, for example, shows that the temperature increases while the rotating machine runs. More specifically, the temperature rises slightly (from 28.5°C to 30.5°C) during the first 2 minutes and then remains relatively constant for the next 4 minutes.

Histogram

Let's take a look at histograms of the data. Histograms show how the data is distributed, including the range over which each parameter varies.

def plot_histograms(df, columns, bins=50, figsize=(12, 6)):
    """
    Plots histograms for the selected columns in the DataFrame, with two columns per row,
    and adds a density line on top of the histogram.

    Parameters:
    df (pandas.DataFrame): The DataFrame containing the data.
    columns (list): List of column names to plot histograms for.
    bins (int): The number of bins for the histograms.
    figsize (tuple): The size of the plot.
    """
    # Calculate the number of rows needed (each row has 2 columns)
    rows = int(np.ceil(len(columns) / 2))

    # Set up the plot
    plt.figure(figsize=figsize)

    # Plot histograms for each selected column
    for i, column in enumerate(columns):
        # Create subplot with 2 columns per row
        plt.subplot(rows, 2, i + 1)

        # Plot histogram with seaborn
        sns.histplot(df[column], bins=bins, color="green", edgecolor='black', stat="density", alpha=0.7)

        # Add the KDE line separately using sns.kdeplot
        sns.kdeplot(df[column], color="red", fill=False, linewidth=2)  # Separate KDE line

        # Add title and labels
        plt.title(f"Histogram of {column}")
        plt.xlabel(column)
        plt.ylabel('Density')

    plt.tight_layout()
    plt.show()


# Example of how to use this function
# Specify the columns for which you want to plot histograms
columns_to_plot = ['X (40g)', 'Y (40g)', 'Z (40g)']  # Replace with your columns (e.g., 'Temperature')

# Call the function to plot histograms with density line
plot_histograms(selected_endaq_df, columns_to_plot, bins=50)

Fig. 4. Histograms of vibration acceleration for X, Y, and Z directions.

In a histogram, the bars show how many data points fall within specific ranges (bins); here, with stat="density", the bar heights are normalized so that the total area of the bars equals 1. The Kernel Density Estimate (KDE) line (solid red in the plots) is a smoothed estimate of the distribution, providing a continuous curve that shows the underlying probability distribution more clearly. In these histograms, the vertical axis represents density and the horizontal axis represents acceleration magnitude.

The plots show that most Y and Z acceleration values fall within roughly (-4, 4) g, while X acceleration is confined to a narrower range of roughly (-1, 2) g.

Theoretically, a machine learning model trained on a specific dataset performs well within the ranges of that data, as it has learned patterns based on those values. However, any unseen data outside of that range is unfamiliar to the model, making it challenging for the model to perform accurately on such data.
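One practical consequence is that it is worth checking whether new data falls inside the range seen during training. The helper below, out_of_range_mask, is a hypothetical sketch (not an EnDAQ or pandas function) that uses each column's training minimum and maximum as the bounds; percentile-based bounds would be a less outlier-sensitive variant.

```python
import pandas as pd

def out_of_range_mask(train_df: pd.DataFrame, new_df: pd.DataFrame) -> pd.Series:
    """Hypothetical helper: True for rows of new_df with any value outside the training min/max."""
    lower = train_df.min()   # per-column minimum seen during training
    upper = train_df.max()   # per-column maximum seen during training
    cols = train_df.columns
    # Comparing a DataFrame with a Series aligns the Series index with the columns
    outside = (new_df[cols] < lower) | (new_df[cols] > upper)
    return outside.any(axis=1)

# Hypothetical example with two acceleration columns (values in g)
train = pd.DataFrame({"X": [-1.0, 0.5, 2.0], "Y": [-4.0, 0.0, 4.0]})
new = pd.DataFrame({"X": [0.0, 2.5, 1.0], "Y": [1.0, 0.0, -5.0]})
print(out_of_range_mask(train, new).tolist())  # [False, True, True]
```

With the enDAQ data, train_df could be something like selected_endaq_df[['X (40g)', 'Y (40g)', 'Z (40g)']], and flagged rows would deserve extra scrutiny before trusting the model's output.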

Statistical metrics

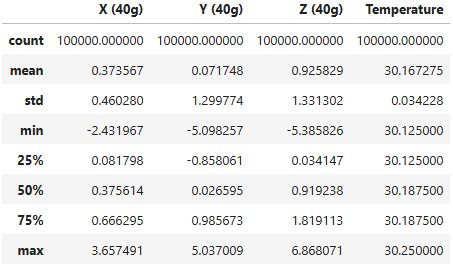

Statistical metrics such as mean, standard deviation, minimum, 25th percentile, 50th percentile (median), 75th percentile, and maximum values can provide quick and valuable insights into the data.

# Compute the statistics of the selected data
statistics = selected_endaq_df[['X (40g)', 'Y (40g)', 'Z (40g)', 'Temperature']].describe()

# Print the statistics
display(statistics)

Outliers

Detecting and, where appropriate, removing outliers is a crucial step in data cleaning. An outlier is a data point that deviates significantly from other observations in a dataset, often lying far from the general trend or pattern. It may indicate measurement error, natural variability, or a genuine anomaly in the data.

One of the simplest approaches for detecting outliers is using a boxplot. A boxplot identifies outliers based on the interquartile range (IQR). It displays the data's median, quartiles, and "whiskers" that extend up to 1.5 times the IQR from the quartiles. Data points outside this range, either above or below the whiskers, are considered outliers.
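The whisker rule described above is easy to compute directly, which is handy when outliers need to be flagged programmatically rather than spotted on a plot. The iqr_outliers helper below is a hypothetical sketch that mirrors the 1.5 × IQR rule (seaborn's boxplot uses whis=1.5 by default); the sample data is invented.

```python
import pandas as pd

def iqr_outliers(s: pd.Series, k: float = 1.5) -> pd.Series:
    """Hypothetical helper: flag values more than k*IQR below Q1 or above Q3."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr   # the boxplot whisker limits
    return (s < lower) | (s > upper)

# Hypothetical data: small values plus one large spike
s = pd.Series([0.1, -0.2, 0.0, 0.3, -0.1, 0.2, 5.0])
print(s[iqr_outliers(s)].tolist())  # [5.0]
```

Applied to the enDAQ data, iqr_outliers(selected_endaq_df['X (40g)']) would return a boolean mask that can be used to inspect or drop the flagged rows.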

def plot_boxplots_with_outliers(df, columns, figsize=(12, 6)):
    """
    Plots boxplots for the selected columns in the DataFrame to visualize outliers.

    Parameters:
    df (pandas.DataFrame): The DataFrame containing the data.
    columns (list): List of column names to plot boxplots for.
    figsize (tuple): The size of the plot.
    """
    # Calculate the number of rows needed (each row has 2 columns)
    rows = int(np.ceil(len(columns) / 2))

    # Set up the plot
    plt.figure(figsize=figsize)

    for i, column in enumerate(columns):
        plt.subplot(rows, 2, i + 1)  # Make sure to start at position i+1

        # Plot boxplot to visualize outliers
        sns.boxplot(x=df[column], color="skyblue", fliersize=5, linewidth=1.5)

        # Add title and labels for the plot
        plt.title(f"Boxplot of {column}")
        plt.xlabel(column)

    plt.tight_layout()
    plt.show()


# Example of how to use this function
columns_to_plot = ['X (40g)', 'Y (40g)', 'Z (40g)', 'Temperature']  # Replace with your columns

# Call the function to plot boxplots for detecting outliers
plot_boxplots_with_outliers(selected_endaq_df, columns_to_plot)

Fig. 5. Box plot showing outliers in X, Y and Z acceleration, and Temperature.

Other outlier detection methods include:

- Z-score: Identifies outliers by calculating how many standard deviations a data point is from the mean, flagging extreme values.

- IQR (Interquartile Range): Flags data points outside the range defined by 1.5 times the IQR above the third quartile or below the first quartile.

- Isolation Forest: A machine learning algorithm that isolates anomalies by recursively partitioning the data at random; outliers need fewer splits to be separated from the rest.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): A clustering method that detects outliers as points that do not belong to any cluster.

- Local Outlier Factor (LOF): Identifies outliers by comparing the local density of points, detecting those that are in regions of lower density than their neighbors.
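As an example of the first method in this list, a Z-score filter takes only a few lines. The sketch below is an assumption-laden illustration: the zscore_outliers name is hypothetical, the data is synthetic, and the threshold of 3 is a common convention rather than a rule. Keep in mind that the mean and standard deviation are themselves inflated by large outliers, which is one reason the IQR rule is often more robust.

```python
import numpy as np
import pandas as pd

def zscore_outliers(s: pd.Series, threshold: float = 3.0) -> pd.Series:
    """Hypothetical helper: flag values whose absolute z-score exceeds the threshold."""
    z = (s - s.mean()) / s.std(ddof=0)   # distance from the mean in standard deviations
    return z.abs() > threshold

# Synthetic data: 1000 normally distributed samples with one injected spike
rng = np.random.default_rng(42)
s = pd.Series(rng.normal(0.0, 1.0, 1000))
s.iloc[10] = 12.0
mask = zscore_outliers(s)
print(s[mask])
```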

Feature Selection

Feature selection is the process of selecting the most important features (variables) from a dataset to build a model, aiming to improve its performance, reduce overfitting, and decrease computational cost.

Pearson and Spearman correlation analysis can be part of feature selection. These methods measure the relationship between features:

- Pearson correlation evaluates the linear relationship between two continuous variables.

- Spearman correlation assesses the monotonic relationship, which can be either linear or non-linear.

$$\rho_{X,Y} = 1 - \frac{6\sum_{i=1}^{n} d_i^{2}}{n\left(n^{2}-1\right)} \qquad \text{(Eq. 2)}$$

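where X and Y are the two variables being compared, n is the number of paired measurements, and $d_i$ is the difference between the ranks of the i-th pair. This simplified form is exact only when there are no tied ranks; with ties, Spearman's coefficient is computed as the Pearson correlation of the ranks.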
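To see Eq. 2 in action, here is a small worked example on hypothetical data with no ties. The rank differences are 0, 1, -1, 0, 0, so Eq. 2 gives 1 - 6(2)/(5(25 - 1)) = 0.9, which matches what pandas reports for method="spearman". The spearman_eq2 function name is hypothetical; rankdata is SciPy's standard ranking function.

```python
import numpy as np
import pandas as pd
from scipy.stats import rankdata

def spearman_eq2(x, y) -> float:
    """Spearman's rho from Eq. 2 (exact only when neither variable has tied values)."""
    d = rankdata(x) - rankdata(y)   # rank difference d_i for each pair
    n = len(x)
    return 1 - 6 * np.sum(d ** 2) / (n * (n ** 2 - 1))

# Small hypothetical example with no ties
x = [1.2, 3.4, 2.2, 5.0, 4.1]
y = [0.9, 2.8, 3.1, 4.7, 4.0]

rho_eq2 = spearman_eq2(x, y)
rho_pandas = pd.Series(x).corr(pd.Series(y), method="spearman")
print(rho_eq2, rho_pandas)
```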

Features with low correlation to the target variable might be discarded during feature selection to improve model performance. A correlation coefficient of 1 indicates a perfect positive relationship, 0 indicates no linear (Pearson) or monotonic (Spearman) relationship, and -1 indicates a perfect negative (inverse) relationship.
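A correlation-based filter like this can be sketched in a few lines of pandas. The select_by_correlation helper and its 0.1 threshold are hypothetical choices for illustration, and the toy data is invented. Note the caveat it exposes: f2 is completely determined by the target yet has zero rank correlation with it, because the relationship rises and then falls. That is why methods such as mutual information (listed later in this section) can complement correlation.

```python
import pandas as pd

def select_by_correlation(df, target, min_abs_corr=0.1, method="spearman"):
    """Hypothetical helper: keep features whose |correlation| with `target` is at least min_abs_corr."""
    corr = df.corr(method=method)[target].drop(target)  # correlation of each feature with the target
    return corr[corr.abs() >= min_abs_corr].index.tolist()

# Hypothetical data: f1 rises with the target; f2 rises then falls (non-monotonic)
df = pd.DataFrame({
    "f1": [1, 2, 3, 4, 5, 7],
    "f2": [1, 2, 3, 3, 2, 1],
    "target": [2, 4, 6, 8, 10, 12],
})
print(select_by_correlation(df, "target"))  # ['f1']
```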

In our data, the highest positive correlation is observed between X and Z acceleration (0.31 for both Pearson and Spearman), indicating a weak-to-moderate tendency for Z acceleration to increase as X acceleration increases. In contrast, the correlation coefficient between X and Y acceleration is negative, meaning that as acceleration in one direction increases, acceleration in the other tends to decrease. The correlation between vibration and temperature is negligible; given the low temperature variance in our data and the small sample used in this example, it would be difficult to determine any meaningful correlation.

def calculate_correlations_with_heatmap(df, columns):
    """
    Calculates the Pearson and Spearman correlation coefficients for the specified columns.
    Removes the upper-right triangle of the correlation matrix and adds a heatmap.

    Parameters:
    df (pandas.DataFrame): The DataFrame containing the data.
    columns (list): List of columns for which to calculate correlations.
    """
    # Select the data for the specified columns
    selected_data = df[columns]

    # Pearson correlation
    pearson_corr = selected_data.corr(method='pearson')
    print("Pearson Correlation Matrix:")
    display(pearson_corr)
    print("\n")

    # Spearman correlation
    spearman_corr = selected_data.corr(method='spearman')
    print("Spearman Correlation Matrix:")
    display(spearman_corr)

    # Mask upper right triangle for both Pearson and Spearman matrices
    mask = np.triu(np.ones_like(pearson_corr, dtype=bool))

    # Plot Pearson correlation heatmap
    plt.figure(figsize=(10, 8))
    sns.heatmap(pearson_corr, annot=True, cmap='coolwarm', mask=mask, cbar=True, fmt='.2f', linewidths=1, square=True)
    plt.title('Pearson Correlation Heatmap')
    plt.show()

    # Plot Spearman correlation heatmap
    plt.figure(figsize=(10, 8))
    sns.heatmap(spearman_corr, annot=True, cmap='coolwarm', mask=mask, cbar=True, fmt='.2f', linewidths=1, square=True)
    plt.title('Spearman Correlation Heatmap')
    plt.show()


# Example usage
columns_to_analyze = ['X (40g)', 'Y (40g)', 'Z (40g)', 'Temperature']  # Replace with your columns

# Call the function to calculate correlations and plot heatmaps
calculate_correlations_with_heatmap(selected_endaq_df, columns_to_analyze)

Pearson correlation:

Spearman correlation:

Fig. 6. Pearson (top) and Spearman (bottom) coefficients for X, Y, Z, and temperature.

Other feature selection methods include:

- Chi-Square Test: Assesses the independence of categorical features by comparing observed and expected frequencies.

- Mutual Information: Measures the amount of information shared between two variables, capturing both linear and non-linear relationships.

- Recursive Feature Elimination (RFE): Repeatedly fits a model and removes the least important features (ranked by the model's coefficients or feature importances) until the desired number of features remains.

- L1 Regularization (Lasso): Adds an L1 penalty that shrinks the coefficients of less important features to zero, effectively performing feature selection.

- ANOVA F-test: For each feature, compares the variance between groups (e.g., target classes) with the variance within groups, and selects features whose group means differ significantly.

- Tree-based methods (e.g., Random Forest): Uses decision tree algorithms to rank features based on their importance in predicting the target variable.

Filtering Data to Remove Noise

Noise removal methods aim to reduce random fluctuations or errors in data. Common methods include the moving average, median filter, Savitzky-Golay filter, wavelet transform, exponential moving average (EMA), and Gaussian smoothing. EnDAQ also offers a Butterworth filter through the function endaq.calc.filters.butterworth, which is explained in detail here. Among these, the moving average is one of the most common due to its simplicity and effectiveness in smoothing time-series data.
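For readers who want to try a Butterworth filter outside the EnDAQ library, the sketch below builds a low-pass filter with SciPy. It is not EnDAQ's endaq.calc.filters.butterworth implementation; the 200 Hz cutoff, the 4th-order design, and the synthetic 50 Hz plus 1500 Hz test signal are assumptions chosen for illustration. Only the 4052.60 Hz sample rate comes from this post.

```python
import numpy as np
from scipy import signal

fs = 4052.60      # sample rate of the 40g channel (Hz)
cutoff = 200.0    # hypothetical low-pass cutoff (Hz)

# 4th-order Butterworth low-pass, in second-order sections for numerical stability
sos = signal.butter(4, cutoff, btype="lowpass", fs=fs, output="sos")

# Synthetic test signal: a 50 Hz "vibration" plus a 1500 Hz "noise" tone, 1 s long
t = np.arange(0, 1.0, 1 / fs)
clean = np.sin(2 * np.pi * 50 * t)
noisy = clean + 0.5 * np.sin(2 * np.pi * 1500 * t)

# sosfiltfilt runs the filter forward and backward, so the output has no phase lag
filtered = signal.sosfiltfilt(sos, noisy)
```

To filter the enDAQ data the same way, signal.sosfiltfilt(sos, selected_endaq_df['X (40g)'].to_numpy()) would apply the identical filter to one axis.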

The Moving Average (MA) method smooths time-series data by averaging data points within a specified window, typically a fixed number of neighboring values. It reduces noise by dampening short-term fluctuations, making long-term trends clearer.

# Work on a copy so the original selection is untouched (selected_endaq_df might be a view)
selected_endaq_ma_df = selected_endaq_df.copy()

# Apply a moving average to the 'X (40g)', 'Y (40g)', and 'Z (40g)' columns for smoothing
window_size = 5  # Size of the moving average window (number of data points)

# Apply a rolling mean to each column. rolling() uses a trailing window by default;
# pass center=True to avoid shifting the smoothed signal by (window_size - 1) / 2 samples.
for column in ['X (40g)', 'Y (40g)', 'Z (40g)']:
    selected_endaq_ma_df[column] = selected_endaq_ma_df[column].rolling(window=window_size, min_periods=1).mean()

# Create the new filtered DataFrame with smoothed values for all three axes
filtered_endaq_ma_df = selected_endaq_ma_df.copy()

# Verify the filtered DataFrame
filtered_endaq_ma_df

The raw data and the moving-average-filtered data are compared in the plot below.

import plotly.graph_objects as go
from plotly.subplots import make_subplots

# Optional: for long recordings, downsample before plotting to keep the figure responsive,
# e.g. selected_endaq_df.resample('1s').mean(). The ~25 s selection here is plotted at full rate.

# Create a 3x1 subplot (3 rows, 1 column) in Plotly
fig = make_subplots(rows=3, cols=1, shared_xaxes=True, vertical_spacing=0.1,
                    subplot_titles=("X (40g) Time Series Plot", "Y (40g) Time Series Plot", "Z (40g) Time Series Plot"))

# Plot 'X (40g)' column in the first subplot (row 1, column 1)
fig.add_trace(go.Scatter(x=selected_endaq_df.index, y=selected_endaq_df["X (40g)"],
                         mode='lines', name="X (40g) - Original", line=dict(color='blue', width=1.2)),
              row=1, col=1)
fig.add_trace(go.Scatter(x=filtered_endaq_ma_df.index, y=filtered_endaq_ma_df["X (40g)"],
                         mode='lines', name="X (40g) - Filtered (MA)", line=dict(color='red', dash='dash', width=1.2)),
              row=1, col=1)

# Plot 'Y (40g)' column in the second subplot (row 2, column 1)
fig.add_trace(go.Scatter(x=selected_endaq_df.index, y=selected_endaq_df["Y (40g)"],
                         mode='lines', name="Y (40g) - Original", line=dict(color='orange', width=1.2)),
              row=2, col=1)
fig.add_trace(go.Scatter(x=filtered_endaq_ma_df.index, y=filtered_endaq_ma_df["Y (40g)"],
                         mode='lines', name="Y (40g) - Filtered (MA)", line=dict(color='purple', dash='dash', width=1.2)),
              row=2, col=1)

# Plot 'Z (40g)' column in the third subplot (row 3, column 1)
fig.add_trace(go.Scatter(x=selected_endaq_df.index, y=selected_endaq_df["Z (40g)"],
                         mode='lines', name="Z (40g) - Original", line=dict(color='green', width=1.2)),
              row=3, col=1)
fig.add_trace(go.Scatter(x=filtered_endaq_ma_df.index, y=filtered_endaq_ma_df["Z (40g)"],
                         mode='lines', name="Z (40g) - Filtered (MA)", line=dict(color='black', dash='dash', width=1.2)),
              row=3, col=1)

# Update layout (title, size, background)
fig.update_layout(
    title="Time Series of Acceleration (X, Y, Z)",
    height=900,  # Adjust overall figure height
    showlegend=True,
    plot_bgcolor="white",
    template="plotly",  # You can also customize the template
)

# Axis settings in update_layout only reach the first subplot, so apply them to every subplot here
fig.update_xaxes(showgrid=True, gridcolor="lightgrey")
fig.update_xaxes(title_text="Timestamp", row=3, col=1)
fig.update_yaxes(showgrid=True, gridcolor="lightgrey", title_text="Acceleration (g)")

# Show the plot
fig.show()

Fig. 7. Original and filtered vibrations of X, Y, and Z directions using Moving Average method.

Fig. 8. Original and filtered vibrations of X, Y, and Z directions using Moving Average method (Zoomed-in).

You might be wondering, "Why don't we define a threshold for noise removal?" Defining a threshold can also be effective, especially when the noise has high amplitude or is easily distinguishable from the signal. It involves setting a cutoff value; any data points exceeding this threshold are removed or altered. While simple, this method may lead to data loss if the threshold is too strict, and it may miss noise that is subtle. It works best when noise is clearly separated from the signal, but it may not suit all types of noise, especially noise whose amplitude or frequency is similar to that of the signal. Combining thresholding with other techniques can improve results.
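A minimal version of amplitude thresholding is sketched below. The threshold_spikes helper, the limit of 5 g, and the choice to replace flagged samples by linear interpolation (rather than dropping them) are all assumptions for illustration. A fixed limit like this is exactly the kind of strict threshold that can also remove genuine high-amplitude events, so it should be chosen with the signal's expected range in mind.

```python
import pandas as pd

def threshold_spikes(s: pd.Series, limit: float) -> pd.Series:
    """Hypothetical helper: replace samples with |value| > limit by linear interpolation."""
    cleaned = s.mask(s.abs() > limit)   # out-of-threshold samples become NaN
    return cleaned.interpolate(method="linear", limit_direction="both")

# Hypothetical data (in g) with two spikes
s = pd.Series([0.1, 0.2, 9.0, 0.4, 0.3, -8.0, 0.1])
result = threshold_spikes(s, limit=5.0)
print(result.tolist())
```

Here the spike at index 2 becomes roughly 0.3 (midway between its neighbors) and the spike at index 5 becomes roughly 0.2.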

There can be confusion between noise and outliers, as they are related but not the same. Noise refers to random, unpredictable variations or errors in the data that can obscure the true signal, while outliers are observations that differ significantly from the rest of the data, often deviating far from the general trend or pattern. Outliers can be considered a form of noise when they result from errors or anomalies in data collection, but not all noise consists of outliers. Noise can be more subtle and may not always manifest as extreme values.

Fig. 9. Difference between outlier and noise.

FFT analysis – Key Features From Vibration

As mentioned previously, vibration data can offer unique system insights. One of the primary methods for extracting these key insights is by using the Fast Fourier Transform (FFT). FFT is an algorithm used to compute the Discrete Fourier Transform (DFT) efficiently, converting a time-domain signal into its frequency-domain representation. It breaks down a signal into its constituent frequencies, making it useful for analyzing periodic components and identifying noise or trends in data. For additional information, check out the blog on Vibration Analysis: Fourier Transform, Power Spectral Density, and Aggregate FFT.

Key parameters of FFT include:

- Sampling Rate (fs): The rate at which the signal is sampled, affecting the frequency resolution and range.

- Window Size (N): The number of data points used in the FFT; a larger window gives better frequency resolution.

- Frequency Resolution: Determined by fs/N, it defines the smallest frequency difference that can be detected.

- Frequency Range: The highest detectable frequency is fs/2 (the Nyquist frequency); the lowest nonzero frequency equals the frequency resolution fs/N, i.e., 1/T for a window of duration T.
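Plugging in the numbers for the 40g channel makes these parameters concrete. At fs = 4052.60 Hz, the Nyquist frequency is about 2026 Hz; a 256-sample segment (the window size used in the FFT code later in this post) gives a resolution of about 15.8 Hz, while the full 100,000-sample selection gives about 0.04 Hz. This is plain arithmetic on values from the text.

```python
fs = 4052.60  # sample rate of the 40g channel (Hz)

nyquist = fs / 2  # highest frequency the FFT can represent
print(f"Nyquist frequency: {nyquist:.1f} Hz")

for n in (256, 100_000):  # FFT segment size used later, and the full 100,000-row selection
    resolution = fs / n   # bin spacing, which is also the lowest nonzero frequency (Hz)
    duration = n / fs     # time span covered by n samples (s)
    print(f"N = {n}: resolution = {resolution:.4f} Hz, duration = {duration:.3f} s")
```

The trade-off is visible immediately: the longer the window, the finer the frequency resolution, but the less able the analysis is to track changes over time.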

FFT is widely used in signal processing, audio analysis, and noise removal.

In signal processing, the following techniques are used to improve the analysis of signals, especially in FFT and spectral analysis:

- Windowing: This technique applies a window function (e.g., Hamming, Hanning, or Blackman-Harris) to a segment of the signal before applying the FFT. Windowing reduces spectral leakage by tapering the signal at the boundaries, which minimizes discontinuities. The window function helps isolate a portion of the signal and reduces distortion caused by abrupt edges.

- Averaging: Averaging involves computing the FFT of multiple segments of a signal and then averaging the results. This reduces variance and noise in the frequency domain, resulting in a smoother and more reliable spectrum. Techniques like power spectral averaging are commonly used to enhance the accuracy of frequency analysis, particularly when dealing with noisy signals.

- Overlapping: In this method, successive segments of the signal overlap, typically by 50% or more. Because a window function attenuates samples near the segment edges, overlapping ensures that those samples receive full weight in a neighboring segment, minimizing the loss of information at segment boundaries. Overlapping is often combined with windowing to provide continuous analysis of signals, especially in real-time processing or when dealing with time-varying signals.

Together, these techniques improve the accuracy and quality of frequency-domain analysis, particularly for noisy or complex signals.
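SciPy's scipy.signal.welch bundles all three techniques: it splits the signal into overlapping segments, applies a window to each one, and averages the resulting periodograms into a power spectral density. The sketch below is an alternative to the hand-written apply_fft function used in this post, not the method behind its figures; the synthetic 60 Hz signal, the noise level, and the nperseg/noverlap values are assumptions chosen for illustration.

```python
import numpy as np
from scipy import signal

fs = 4052.60  # sample rate (Hz)
t = np.arange(0, 10.0, 1 / fs)
rng = np.random.default_rng(0)

# Synthetic signal: a 60 Hz vibration buried in broadband noise
x = np.sin(2 * np.pi * 60 * t) + rng.normal(0, 1.0, t.size)

# Welch's method: 1024-sample segments, Hann window on each,
# 50% overlap (512 samples), periodograms averaged into one PSD
f, psd = signal.welch(x, fs=fs, window="hann", nperseg=1024, noverlap=512)

peak_freq = f[np.argmax(psd)]
print(f"Peak at {peak_freq:.1f} Hz (bin spacing {fs / 1024:.2f} Hz)")
```

Even though the noise has the same standard deviation as the sine's amplitude, averaging about 80 overlapping segments makes the 60 Hz peak stand out clearly; a single unaveraged FFT of the same data would show a much noisier background.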

Let’s apply the FFT to the X, Y, and Z acceleration data:

from scipy.fft import fft

# Parameters for FFT processing
windowing = False     # Apply a Hanning window to each segment
averaging = True      # Average spectra across segments (only has an effect when windowing or overlap is enabled)
overlap = False       # Use overlapping segments
window_size = 256     # Size of each segment for FFT analysis
overlap_factor = 0.5  # Overlap factor (between 0 and 1)
fs = 4052.60          # Sampling frequency in Hz (adjust to your data)


# Function to apply FFT with optional windowing, averaging, and overlapping
def apply_fft(df, column, windowing=True, averaging=False, overlap=False,
              window_size=256, overlap_factor=0.5, fs=1.0):
    """
    Apply FFT to the specified column of the DataFrame with optional windowing, averaging, and overlapping.

    Parameters:
    - df: DataFrame containing the acceleration data.
    - column: The column name (e.g., "X (40g)", "Y (40g)", "Z (40g)").
    - windowing: If True, apply a Hanning window to each segment.
    - averaging: If True, average the segment spectra into a single spectrum.
    - overlap: If True, use overlapping segments with the given overlap factor.
    - window_size: Size of each segment for FFT analysis.
    - overlap_factor: Factor for overlap (between 0 and 1).
    - fs: Sampling frequency (default is 1.0, meaning frequencies are in cycles per sample).

    Returns:
    - f: Frequencies of the FFT result.
    - fft_results: FFT magnitudes, one row per segment (a single row when averaged),
      or a 1-D array for the whole signal.
    """
    n = len(df)
    if overlap or windowing:
        # Segment-based FFT
        step = int(window_size * (1 - overlap_factor)) if overlap else window_size
        f = np.fft.fftfreq(window_size, 1 / fs)[:window_size // 2]  # Frequency axis
        fft_results = []
        for start in range(0, n - window_size + 1, step):
            segment = df[column].iloc[start:start + window_size].values
            if windowing:
                segment = segment * np.hanning(window_size)  # Apply Hanning window
            fft_segment = fft(segment)
            fft_results.append(np.abs(fft_segment[:window_size // 2]))  # Keep positive frequencies
        fft_results = np.array(fft_results)
        if averaging:
            fft_results = np.mean(fft_results, axis=0)  # Average across all segments
            fft_results = np.expand_dims(fft_results, axis=0)  # Keep it 2-D for plotting
    else:
        # Compute the FFT of the entire signal when no windowing or overlap is applied
        segment = df[column].values
        fft_segment = fft(segment)
        f = np.fft.fftfreq(n, 1 / fs)[:n // 2]  # Frequency axis
        fft_results = np.abs(fft_segment[:n // 2])  # Positive frequencies
        if averaging:
            # A single whole-signal spectrum has nothing to average; just make it 2-D for plotting
            fft_results = np.expand_dims(fft_results, axis=0)
    return f, fft_results


# Example usage: apply FFT to X, Y, Z with the options set above
f_x, fft_x = apply_fft(selected_endaq_df, "X (40g)", windowing=windowing, averaging=averaging, overlap=overlap,
                       window_size=window_size, overlap_factor=overlap_factor, fs=fs)
f_y, fft_y = apply_fft(selected_endaq_df, "Y (40g)", windowing=windowing, averaging=averaging, overlap=overlap,
                       window_size=window_size, overlap_factor=overlap_factor, fs=fs)
f_z, fft_z = apply_fft(selected_endaq_df, "Z (40g)", windowing=windowing, averaging=averaging, overlap=overlap,
                       window_size=window_size, overlap_factor=overlap_factor, fs=fs)

# Plot the FFT results using Plotly.
# np.atleast_2d(...)[0] takes the single (or averaged) spectrum; without averaging in segment
# mode it shows the first segment, so x and y always have the same length.
fig = go.Figure()
fig.add_trace(go.Scatter(x=f_x, y=np.atleast_2d(fft_x)[0], mode='lines', name='FFT of X (40g)', line=dict(color='blue')))
fig.add_trace(go.Scatter(x=f_y, y=np.atleast_2d(fft_y)[0], mode='lines', name='FFT of Y (40g)', line=dict(color='orange')))
fig.add_trace(go.Scatter(x=f_z, y=np.atleast_2d(fft_z)[0], mode='lines', name='FFT of Z (40g)', line=dict(color='green')))

# Update layout for the plot
fig.update_layout(title="FFT of Acceleration Data (X, Y, Z)",
                  xaxis_title="Frequency (Hz)",
                  yaxis_title="Magnitude",
                  legend_title="Channels",
                  template="plotly",
                  plot_bgcolor='white',   # Set plot background to white
                  paper_bgcolor='white',  # Set paper background to white
                  height=800,
                  showlegend=True)

# Show the plot
fig.show()

Fig. 10. FFT results on acceleration of X, Y, and Z directions.

Note that enDAQ provides a built-in function for this analysis, endaq.calc.fft.aggregate_fft(df, **kwargs). For more details, please refer to the enDAQ 1.5.3 documentation.
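If you prefer to stay within SciPy, a comparable averaged spectrum can be computed with scipy.signal.welch, which splits the signal into overlapping segments, windows each one, and averages their periodograms. The sketch below is an illustrative alternative, not a description of how aggregate_fft is implemented; the helper name welch_spectrum and the synthetic 200 Hz test signal are assumptions. Note that welch returns a power spectral density, not the raw FFT magnitude that apply_fft returns.

```python
import numpy as np
from scipy.signal import welch


def welch_spectrum(signal, fs, window_size=256, overlap_factor=0.5):
    """Averaged power spectral density via Welch's method (Hann window, overlapping segments).

    welch_spectrum is a hypothetical helper used only for illustration.
    """
    noverlap = int(window_size * overlap_factor)
    # welch() windows each overlapping segment and averages the resulting periodograms
    f, psd = welch(signal, fs=fs, window="hann", nperseg=window_size, noverlap=noverlap)
    return f, psd


# Hypothetical test signal: a 200 Hz tone plus noise, at the sampling rate used above
fs = 4052.60
t = np.arange(0, 2.0, 1 / fs)
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 200 * t) + 0.2 * rng.standard_normal(t.size)

f, psd = welch_spectrum(x, fs)
print(f"Dominant frequency: {f[np.argmax(psd)]:.1f} Hz")
```

With window_size=256 the frequency resolution is fs / 256 (about 15.8 Hz here), so the reported peak lands on the bin nearest 200 Hz. Larger windows give finer resolution at the cost of fewer averages.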

The following information can be derived from FFT:

- The highest peak in the FFT represents the dominant frequency in the signal. This frequency corresponds to the main oscillation or periodic component of the data. In practical terms, it indicates the primary frequency at which the system or signal is vibrating or oscillating. The amplitude of this peak shows the strength or intensity of that frequency. A higher peak means that the signal contains a stronger component at that frequency.

- Harmonics are integer multiples of the fundamental frequency, which is often (but not always) the frequency of the highest peak. If the fundamental frequency is f0, the second harmonic is at 2f0, the third harmonic is at 3f0, and so on (the fundamental itself is the first harmonic). Harmonics arise when the system behaves nonlinearly, or more generally whenever a periodic signal is not a pure sinusoid. In vibration data, harmonics often appear when there are mechanical resonances or distortions in the system. Their presence and amplitude indicate how non-ideal the system's behavior is: significant harmonics can point to nonlinear behavior, mechanical faults (e.g., imbalance, misalignment), or resonances.

- Structural resonances can appear in the data and indicate where the natural frequencies of the structure lie. These natural frequencies depend on the system's boundary conditions and can shift due to factors such as loosening bolts, material loss from corrosion, or added mass from ice buildup.

- Noise can appear as smaller peaks throughout the FFT result, and these may not represent significant frequency components. Filtering, windowing, or averaging can help mitigate these effects. Spurious peaks can also arise from artifacts in the data, such as poor sampling or non-periodic noise, so it is important to distinguish real frequency components from these artifacts.
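The first two points (dominant frequency and harmonics) can be checked numerically. Below is a minimal NumPy sketch, assuming a uniformly sampled signal and a known sampling rate. The helper names (amplitude_spectrum, dominant_frequency, harmonic_amplitudes), the ±2 Hz search band, and the clipped 30 Hz test signal are illustrative assumptions, not part of the enDAQ workflow.

```python
import numpy as np


def amplitude_spectrum(signal, fs):
    """Single-sided amplitude spectrum: a sine of amplitude A shows up with magnitude ~A."""
    n = len(signal)
    freqs = np.fft.rfftfreq(n, d=1 / fs)
    # Remove the DC offset first so the 0 Hz bin does not dominate
    amps = 2 * np.abs(np.fft.rfft(signal - np.mean(signal))) / n
    return freqs, amps


def dominant_frequency(freqs, amps):
    """Frequency and amplitude of the largest non-DC component."""
    idx = np.argmax(amps[1:]) + 1  # skip the 0 Hz bin
    return freqs[idx], amps[idx]


def harmonic_amplitudes(freqs, amps, f0, n_harmonics=4, tol_hz=2.0):
    """Largest amplitude within +/- tol_hz of each multiple k*f0, for k = 1..n_harmonics."""
    result = {}
    for k in range(1, n_harmonics + 1):
        band = np.abs(freqs - k * f0) <= tol_hz
        result[k] = float(amps[band].max()) if band.any() else 0.0
    return result


# Hypothetical test signal: a 30 Hz sine clipped at +/-0.7, a simple symmetric nonlinearity
fs = 1000.0
n = 1000
t = np.arange(n) / fs
clipped = np.clip(np.sin(2 * np.pi * 30 * t), -0.7, 0.7)

freqs, amps = amplitude_spectrum(clipped, fs)
f0, a0 = dominant_frequency(freqs, amps)
harmonics = harmonic_amplitudes(freqs, amps, f0)
for k, amp in harmonics.items():
    print(f"{k}x ({k * f0:.0f} Hz): {amp:.3f}")
```

Because the clipping is symmetric, the sketch reports a strong 1x component and a clear 3x component, while the even multiples (2x, 4x) stay near zero. An asymmetric distortion would add even harmonics as well.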

To extract meaningful features from FFT results for machine learning (ML), a peak-finding routine is essential. This routine identifies the dominant peaks in the frequency spectrum, which represent the primary frequencies present in the signal. Functions such as find_peaks from scipy.signal can detect these local maxima efficiently.
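As a sketch of how find_peaks can separate dominant peaks from small noise bumps, the example below ranks peaks by prominence (how far each peak rises above its surroundings). The helper name dominant_peaks, the prominence threshold of 0.1, and the three-tone synthetic spectrum are assumptions chosen for illustration; a suitable threshold for real data depends on its noise floor.

```python
import numpy as np
from scipy.signal import find_peaks


def dominant_peaks(freqs, magnitudes, n_peaks=3, min_prominence=0.0):
    """Return (frequency, magnitude) pairs for the n_peaks most prominent spectral peaks.

    Passing a prominence value makes find_peaks compute each peak's prominence,
    so small noise bumps can be filtered out and the remaining peaks ranked.
    """
    indices, props = find_peaks(magnitudes, prominence=min_prominence)
    # Sort peaks by prominence, largest first, and keep the top n_peaks
    order = np.argsort(props["prominences"])[::-1][:n_peaks]
    top = indices[order]
    return [(float(freqs[i]), float(magnitudes[i])) for i in top]


# Hypothetical spectrum: tones at 50, 120, and 300 Hz with decreasing amplitude, plus noise
fs = 1000.0
n = 2000
t = np.arange(n) / fs
rng = np.random.default_rng(1)
x = (1.0 * np.sin(2 * np.pi * 50 * t) + 0.6 * np.sin(2 * np.pi * 120 * t)
     + 0.3 * np.sin(2 * np.pi * 300 * t) + 0.05 * rng.standard_normal(n))

freqs = np.fft.rfftfreq(n, d=1 / fs)
mags = 2 * np.abs(np.fft.rfft(x)) / n  # single-sided amplitude scaling

peaks = dominant_peaks(freqs, mags, n_peaks=3, min_prominence=0.1)
for f, m in peaks:
    print(f"{f:.1f} Hz, amplitude {m:.2f}")
```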

Let's find the highest FFT peak of the Y-direction acceleration:

import numpy as np
from scipy.signal import find_peaks
import plotly.graph_objects as go

# Apply FFT to Y acceleration data (uses the apply_fft function defined above)
f_y, fft_y = apply_fft(selected_endaq_df, "Y (40g)", windowing=windowing, averaging=averaging,
                       overlap=overlap, window_size=window_size, overlap_factor=overlap_factor, fs=fs)
spectrum = fft_y.flatten()

# Find all local maxima in the spectrum with find_peaks
# (endpoints, including the 0 Hz bin, are never reported as peaks)
peak_indices, _ = find_peaks(spectrum)

# Choose the tallest local maximum; fall back to the global maximum if no peaks are found
if len(peak_indices) > 0:
    max_peak_index = peak_indices[np.argmax(spectrum[peak_indices])]
else:
    max_peak_index = np.argmax(spectrum)

# Get the frequency and magnitude of the maximum peak
max_peak_freq = f_y[max_peak_index]
max_peak_mag = spectrum[max_peak_index]

# Create a plot of the FFT result
fig = go.Figure()

# Add the FFT plot
fig.add_trace(go.Scatter(x=f_y, y=spectrum, mode='lines', name='FFT of Y (40g)', line=dict(color='orange')))

# Mark the maximum peak with a circle marker
fig.add_trace(go.Scatter(
    x=[max_peak_freq],
    y=[max_peak_mag],
    mode='markers',
    name='Maximum Peak',
    marker=dict(color='red', size=10, symbol='circle')
))

# Update the layout for the plot
fig.update_layout(
    title="FFT of Y Acceleration with Maximum Peak Marked",
    xaxis_title="Frequency (Hz)",
    yaxis_title="Magnitude",
    legend_title="Channels",
    template="plotly",  # Start from the default Plotly template
    plot_bgcolor='white',  # Set plot background to white
    paper_bgcolor='white',  # Set paper background to white
    height=800,
    showlegend=True
)

# Show the plot
fig.show()

As Figure 11 shows, the FFT peak is detected and marked in red:

Fig. 11. Finding FFT peak for Y acceleration.

Once the peaks are identified, key features such as frequency, amplitude, peak width, and peak power can be extracted. These features are valuable for creating feature vectors, which serve as structured representations of the signal’s characteristics that ML models can use for further analysis.
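A minimal sketch of turning detected peaks into such features follows, assuming a uniform frequency axis. It uses scipy.signal.peak_widths to measure each peak's width at half its prominence. The helper name peak_features is hypothetical, and "band power" is defined here, purely for illustration, as the sum of squared magnitudes across the peak's width; other definitions are equally reasonable.

```python
import numpy as np
from scipy.signal import find_peaks, peak_widths, get_window


def peak_features(freqs, magnitudes, min_prominence=0.0):
    """One feature dict per spectral peak: frequency, amplitude, width (Hz), and band power."""
    df = freqs[1] - freqs[0]  # bin spacing in Hz (assumes a uniform frequency axis)
    indices, _ = find_peaks(magnitudes, prominence=min_prominence)
    # Width measured at half of each peak's prominence, in fractional bins
    widths, _, left_ips, right_ips = peak_widths(magnitudes, indices, rel_height=0.5)
    features = []
    for idx, width, left, right in zip(indices, widths, left_ips, right_ips):
        lo, hi = int(np.floor(left)), int(np.ceil(right)) + 1
        features.append({
            "frequency_hz": float(freqs[idx]),
            "amplitude": float(magnitudes[idx]),
            "width_hz": float(width * df),
            # Illustrative definition: sum of squared magnitudes across the peak's width
            "band_power": float(np.sum(magnitudes[lo:hi] ** 2)),
        })
    return features


# Hypothetical signal: 80 Hz (amplitude 1.0) and 200 Hz (amplitude 0.4) tones, Hann-windowed
fs = 1000.0
n = 1000
t = np.arange(n) / fs
x = 1.0 * np.sin(2 * np.pi * 80 * t) + 0.4 * np.sin(2 * np.pi * 200 * t)
window = get_window("hann", n)  # periodic Hann window (SciPy's default for spectral analysis)
freqs = np.fft.rfftfreq(n, d=1 / fs)
mags = 2 * np.abs(np.fft.rfft(x * window)) / window.sum()  # correct for the window's gain

feats = peak_features(freqs, mags, min_prominence=0.05)
for feat in feats:
    print(feat)
```

Each dictionary can then be flattened into a fixed-length feature vector, for example by keeping the features of the N largest peaks per channel.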

These extracted features can be fed into ML models for tasks like classification or anomaly detection. By converting raw FFT data into structured features, the machine learning algorithm can better predict system behavior, detect faults, or identify patterns in the signal.
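To feed these features into a model, a common pattern is to slice the recording into windows and compute the same fixed-length feature vector for each one, giving a 2D matrix with one row per window. The sketch below assumes a single-axis NumPy array; the helper name window_feature_matrix, the four chosen features (RMS, dominant frequency, dominant amplitude, spectral centroid), and the synthetic 30 Hz input are illustrative assumptions rather than a prescribed feature set.

```python
import numpy as np


def window_feature_matrix(signal, fs, window_size=1024, step=512):
    """Slice a signal into (possibly overlapping) windows and compute one feature vector per window.

    Columns (an illustrative choice): RMS, dominant frequency (Hz),
    dominant amplitude, and spectral centroid (Hz).
    """
    window = np.hanning(window_size)
    freqs = np.fft.rfftfreq(window_size, d=1 / fs)
    rows = []
    for start in range(0, len(signal) - window_size + 1, step):
        seg = signal[start:start + window_size]
        seg = seg - seg.mean()  # remove DC offset (e.g. gravity) before analysis
        mags = 2 * np.abs(np.fft.rfft(seg * window)) / window.sum()
        idx = np.argmax(mags[1:]) + 1  # dominant non-DC bin
        centroid = np.sum(freqs * mags) / np.sum(mags)  # magnitude-weighted mean frequency
        rows.append([np.sqrt(np.mean(seg ** 2)), freqs[idx], mags[idx], centroid])
    return np.array(rows)


# Hypothetical input: 4 s of a 30 Hz vibration sampled at 2048 Hz
fs = 2048.0
t = np.arange(int(4 * fs)) / fs
accel = np.sin(2 * np.pi * 30 * t)

X = window_feature_matrix(accel, fs)
print(X.shape)  # one row per window, one column per feature
```

The resulting matrix has the (n_samples, n_features) shape that most ML libraries, including scikit-learn estimators, expect as input.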

Summary

In this blog, we covered the essential steps for preparing vibration sensor data for machine learning, providing practical techniques to improve model performance.

We explored key topics including tools and libraries, reading and converting data formats, resampling, data visualization, and analysis (such as histograms, statistical metrics, and outlier detection).

Additionally, we discussed feature selection, noise filtering, and the application of FFT analysis to extract meaningful insights from the vibration data.

By following these steps, you'll be well-equipped to handle vibration sensor data and apply it effectively in machine learning projects.

In the upcoming blog, we will demonstrate how vibration data and FFT can be utilized for anomaly detection and fault classification.

Resources, Scripts & Files