Building a Machine Learning Model with a Vibration Sensor

Machine learning (ML) is a branch of artificial intelligence that focuses on the development of statistical algorithms that can learn from data and generalize unseen data to perform specific tasks without explicit instructions. For example, we can use machine learning algorithms to;

- Predict patient outcomes based on medical records

- Recommend products to customers based on past behavior and preferences

- Predict machine failure by analyzing sensor data

Building on our previous discussion about anomaly detection (Building an Anomaly Detection Model in Python), this post explores the development of a machine-learning model using data collected from a high-quality vibration sensor.

In this post, we will go through the steps of building a machine-learning model using data collected from a vibration sensor (for full disclosure, I will be using an enDAQ S5-R2000D40 sensor, but most high-quality data sensors will suffice). In particular, we will build a model that performs anomaly detection: the identification of rare items, events, or observations that exhibit non-standard or abnormal behavior, in that they deviate significantly or are inconsistent with the majority of the data.

Anomaly detection has various applications in domains such as cybersecurity, law enforcement, finance, and manufacturing. We will consider the following use case: the identification of structural changes from a machine (e.g. equipment or operational failures), which is often referred to as predictive or condition-based maintenance. To illustrate this, we’ve collected data attached to the top of a box fan, and we’ll go through the steps of;

Setup

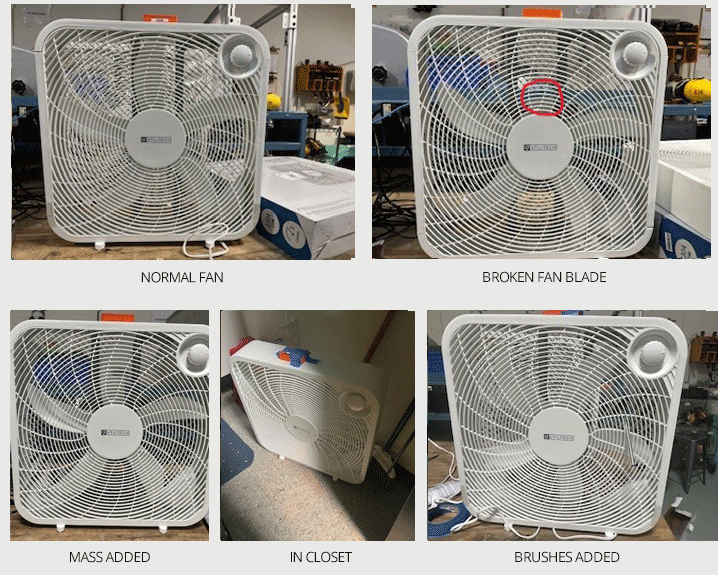

Attaching a high-quality data sensor to the top of a box fan, several recordings were taken from a box fan under different conditions. For the trained anomaly detection model, we will consider the following scenarios:

- A box fan with all blades intact

- A broken blade

- A mass attached to a blade

- Inside a closet (with less airflow

- A brush obstructing the blades.

Note, however, that the process and development of the model can be generalized to different situations, as well as other use cases.

Here, we will say the data that comes from the intact fan as “normal”, and those that comes from the modified fans as “anomalies”. Overall, the model will be trained to accurately differentiate between the data that comes from these different fans.

Machine Learning Basics

In machine learning, we develop models, a program that finds patterns from data and then makes decisions on unseen data. Model development can be summarized in 3 stages:

-

Preprocessing: In this stage, we take raw data and transform it into a suitable format for the model. Since machines can’t directly read text or images, the input must be encoded into numbers, so we represent data as vectors and matrices.

-

Training: During model training, we feed engineered data (called training data) to a parameterized machine learning algorithm, which finds patterns in the training data and outputs a ML model that minimizes an objective or cost function, which evaluates how well the model performs on an engineered dataset.

-

Evaluation/Testing: Once the model has been trained, we can test and evaluate its performance on test data that it did not see during the training stage.

For anomaly detection, there are 3 general categories of techniques that exist:

-

Supervised learning techniques: These require a dataset that contains labels of data points as “normal” and “abnormal” and involves training a classifier that differentiates normal data points from outliers.

-

Semi-supervised techniques: These assume a portion of the dataset is labeled.

-

Unsupervised techniques: These assume that the data is unlabeled.

In many use cases, labeled data is unavailable, which makes unsupervised approaches the most commonly used and viable.

For unsupervised anomaly detection, we’ll typically use one of the following models:

-

Proximity-based models: These models define a data point as an anomaly when locally it is sparsely populated (i.e. there are not many points “near it” depending on how we define distance).

-

One-class Support Vector Machines (OC-SVM): OC-SVM constructs an optimal hyperplane that separates normal data in a high-dimensional kernel space by maximizing the margin between the kernel and hyperplane. It has been developed for anomaly detection in aviation, but tends to perform poorly on short-duration anomalies and can have large computational complexity.

-

Deep learning models: These models come in the form of a particular type of neural network called an autoencoder, which consists of two parts:

-

An encoder transforms the original data into a smaller abstract representation, referred to as a latent space. The latent space is an abstract multi-dimensional space in which items that resemble each other are closer to one another.

-

The decoder turns the abstract representation back into the original input by sampling from the latent space.

-

In particular, we’ll take the third approach and go through the steps of building an autoencoder.

The output from the autoencoder is the reconstructed input. The difference between the reconstructed input and the original input is referred to as reconstruction error, which is a metric that summarizes how far points in the reconstructed input fall from points in the original input.

There are multiple options for how we can define reconstruction error. For instance, we could define reconstruction error to be the mean absolute error between the original input and the reconstruct input. So, if we had a time series X and called its reconstructed version R, reconstruction error could be computed as the mean of the absolute differences between each point in X and the corresponding points in R.

Here, we’ll define reconstruction error to be the mean squared error (MSE, or the averaged of the squared differences between X and R.

The autoencoder will be trained solely on data that is considered “normal”. So, we will expect the model to reconstruct normal data well with low reconstruction error. On the other hand, data that does not come from the distribution of normal data (e.g. anomalies) should be reconstructed poorly with high reconstruction error. We can then effectively use reconstruction error as an anomaly score to differentiate normal points from outliers.

LSTM Autoencoder

Neural networks consist of layers, which are a collection of nodes that operate at a particular depth in the network. The first layer in the neural network is the input layer, which contains the original data.

Then, a neural network will have have a series of hidden layers, where each layer attempts to learn different aspects about the data by minimizing an objective function.

In particular, an autoencoder contains a bottleneck layer, which serves as the latent space and encodes higher-dimensional data into a reduced dimensional-format. This layer separates the encoder from the decoder.

The last layer is the output layer, which contains the output from the neural network.

A neural network may consist of layers of different types, that contain a different number of nodes and are instantiated with a different activation function, which maps input values to a known range to help stabilize training. The collection of layers used is referred to as the architecture of the model.

For this use case, we’ll use a specific type of autoencoder called a Long Short-Term Memory (LSTM) autoencoder, which are designed to support sequences of input data.

We’ll represent the data we collect from the fans as time series. Other representations of the data could be used; for example, we could represent each data point as a collection of features, measurable characteristics such as RMS of acceleration, average temperature, gyroscope orientation, etc. Distinct data representations can convey different information about the data and impact the computational complexity of the model (e.g. data representations that require more space can result in a model that is slower to train or make predictions).

LSTM autoencoders are well suited for time series, since they are capable of learning the dynamics of the temporal ordering of input sequences and can support inputs of varying lengths. LSTM autoencoders consist of LSTM layers, which learn long-term dependencies between time steps in sequence data.

Building the Model

The raw data comes in the form of IDE files. From the IDE file, we’ll represent sensor data as a multivariate time series in the form of an n x d matrix, where n is the length of the series and d is the number of features (i.e. sensors) we incorporate into the model. For instance, if we decide to consider acceleration along each axis as well as orientation, the data will be represented as n x 4 matrix, where each row represents the readings taken from each sensor at a particular point in time.

An LSTM autoencoder expects the length of its input sequences to be the same length. To handle time series of varying lengths that come from different recordings, we split the original series into multiple consecutive series of the same length. Suppose, we define the model to accept sequences of length t. We can take the original n x d matrix, and transform it into a matrix of shape N x t x d, where N is the number of consecutive t-length time series in the full-length series.

In machine learning, we often split the original dataset into a train and validation set. The t-length sequences that fall in each set are chosen randomly, but we usually take the training set to be larger than the validation set (e.g. a 80/20 split is common).

During training, the model we only see points from the training set. Then, after we have trained the model, we’ll evaluate its performance on data that it did not see, such as the validation set.

We’ll treat the validation set as data that comes from the same distribution as the training data, which is considered to be “normal”. Here, a good model should reconstruct inputs from the training and validation sets well. We’ll also have test sets that the we consider to be anomalous, which we’ll take from modified fans. We’ll expect a good model to reconstruct inputs from these test sets poorly.

To preprocess the data, we’ll use primarily use the endaq, NumPy, and pandas Python libraries. To develop the model, we’ll use TensorFlow, an open-source library for machine learning and artificial intelligence.

Summary

This wraps up our two-part series on leveraging machine learning for anomaly detection (Part 1: Building an Anomaly Detection Model in Python). By integrating advanced models with sensor data, you can proactively monitor equipment health, predict failures, and optimize operations in a range of industries. As we continue to innovate, these techniques will become essential for maintaining efficiency and reliability in complex systems.

Related Posts